CoCo4D: Comprehensive and Complex 4D Scene Generation

An anime girl walking in a cherry blossom scene.

In the background, fireworks are bursting. In front of it, there is a teddy bear dancing slowly within a small area.

Abstract

We propose a framework (dubbed CoCo4D) for generating detailed dynamic 4D scenes from text prompts, optionally conditioned on images. Our method builds on a key observation: articulated motion typically characterizes foreground objects, whereas background changes are less pronounced. Consequently, CoCo4D divides 4D scene synthesis into two tasks, modeling the dynamic foreground and creating the evolving background, both guided by a reference motion sequence. Extensive experiments show that CoCo4D achieves comparable or superior performance in 4D scene generation compared to existing methods, demonstrating its effectiveness and efficiency.

Proposed Framework & Method

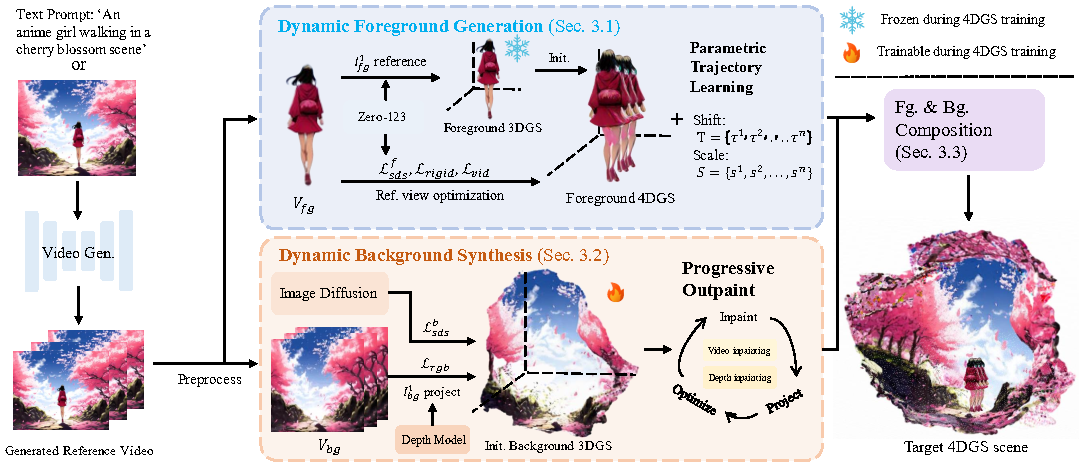

Overview of our CoCo4D. We first generate a reference video (the initial motion) containing both foreground and background, then disentangle the two for separate generation, i.e., Dynamic Foreground Generation (Sec. 3.1 in paper) and Dynamic Background Synthesis (Sec. 3.2 in paper). Finally, we precisely compose these two components (Sec. 3.3 in paper) to form the comprehensive and complex target 4D scene.
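To make the disentangle-then-compose idea concrete, here is a toy sketch at the image level. It assumes the foreground and background have already been rendered as time-aligned grayscale frames and that a per-pixel foreground alpha mask is available. This is plain alpha blending, swapped in for illustration only; it does not reproduce the 3D composition of Sec. 3.3, and the names `composite_frame` and `composite_sequence` are hypothetical.

```python
from typing import List

Frame = List[List[float]]  # grayscale image stored as rows of pixel intensities


def composite_frame(fg: Frame, alpha: Frame, bg: Frame) -> Frame:
    """Alpha-blend one foreground frame over one background frame, pixel by pixel."""
    return [
        [a * f + (1.0 - a) * b for f, a, b in zip(fg_row, a_row, bg_row)]
        for fg_row, a_row, bg_row in zip(fg, alpha, bg)
    ]


def composite_sequence(
    fg_seq: List[Frame], alpha_seq: List[Frame], bg_seq: List[Frame]
) -> List[Frame]:
    """Compose time-aligned foreground and background sequences frame by frame."""
    if not (len(fg_seq) == len(alpha_seq) == len(bg_seq)):
        raise ValueError("sequences must share the same number of frames")
    return [composite_frame(f, a, b) for f, a, b in zip(fg_seq, alpha_seq, bg_seq)]


# Usage: alpha 1.0 keeps the foreground pixel, alpha 0.0 keeps the background pixel.
print(composite_frame([[1.0, 1.0]], [[1.0, 0.0]], [[0.0, 0.5]]))
```

Keeping the two sequences separate until the final blend mirrors, in miniature, why disentangling helps: each component can be generated independently and only has to agree on timing (frame count) at composition time.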

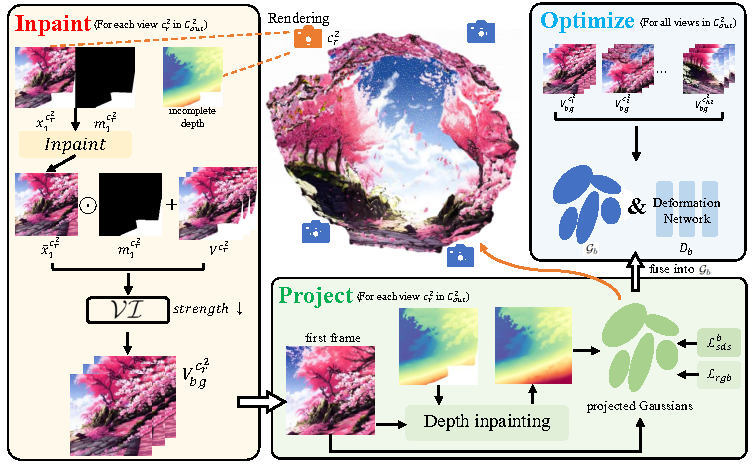

We give a detailed explanation of our progressive outpainting method, which uses an inpaint-project-optimize loop to expand the dynamic background scene.
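The structure of an inpaint-project-optimize loop can be sketched on a toy 1D "scene", assuming a seed view is known and the camera window slides right by a fixed stride with overlap. Everything here is a hypothetical stand-in: `inpaint` copies the nearest known pixel instead of running a generative model, "project" writes the view back into global coordinates, and "optimize" solves a per-pixel least-squares fit (the mean of all observations) rather than optimizing a 3D scene representation as in the paper.

```python
from statistics import mean
from typing import Dict, List, Optional


def inpaint(view: List[Optional[float]]) -> List[float]:
    """Hypothetical inpainter: fill each missing pixel with the nearest known value to its left."""
    filled: List[float] = []
    last: Optional[float] = None
    for v in view:
        if v is not None:
            last = v
        if last is None:
            raise ValueError("view needs a known pixel at its left edge")
        filled.append(last)
    return filled


def progressive_outpaint(seed: List[float], width: int, stride: int) -> List[float]:
    """Expand a 1D toy scene from a seed view with an inpaint-project-optimize loop."""
    w = len(seed)
    if width < w:
        raise ValueError("target width must be at least the seed view size")
    if not 0 < stride < w:
        raise ValueError("stride must be positive and smaller than the view size")
    canvas: List[Optional[float]] = list(seed) + [None] * (width - w)
    # Every observation of each global pixel, gathered from all projected views.
    observations: Dict[int, List[float]] = {i: [v] for i, v in enumerate(seed)}
    start = stride
    while start < width:
        end = min(start + w, width)
        # 1) Inpaint: take the current view and fill its unknown region.
        view = inpaint(canvas[start:end])
        # 2) Project: map the completed view back to global coordinates.
        for offset, value in enumerate(view):
            observations.setdefault(start + offset, []).append(value)
        # 3) Optimize: the per-pixel least-squares solution is the mean of observations.
        for i in range(start, end):
            canvas[i] = mean(observations[i])
        start += stride
    return [float(v) for v in canvas]  # overlapping windows leave no pixel unfilled


print(progressive_outpaint([1.0, 2.0, 3.0], width=6, stride=2))
```

Requiring `stride` to be smaller than the view size guarantees that each new view overlaps already-reconstructed content, which is what lets every expansion step stay anchored to the existing scene.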

Video

Additional results and visualizations

In this part, we first present more 4D scenes generated by our CoCo4D.

On the table, a toy rocking horse sways back and forth slowly as it inches forward, flashes of lightning in the background.

The astronaut slowly standing up on a rocky planet, with a bright planet and stars twinkling behind him.

A hermit crab moves slowly and steadily from left to right across a glistening beach.

An adorable hamster lounges leisurely on a floatie, sporting stylish sunglasses, drifting across the sparkling sea as gentle waves ripple around it. The hamster stretches contentedly.

Moreover, we present some dynamic background scenes on their own to demonstrate the ability of our CoCo4D to generate comprehensive and complex 4D scenes.